PrepWAI began as a simple idea: What if a student or job seeker could practice interviews with an AI that behaves like a real interviewer, understands their resume, reads the job description, remembers past interviews, and gives personalized feedback after every session?

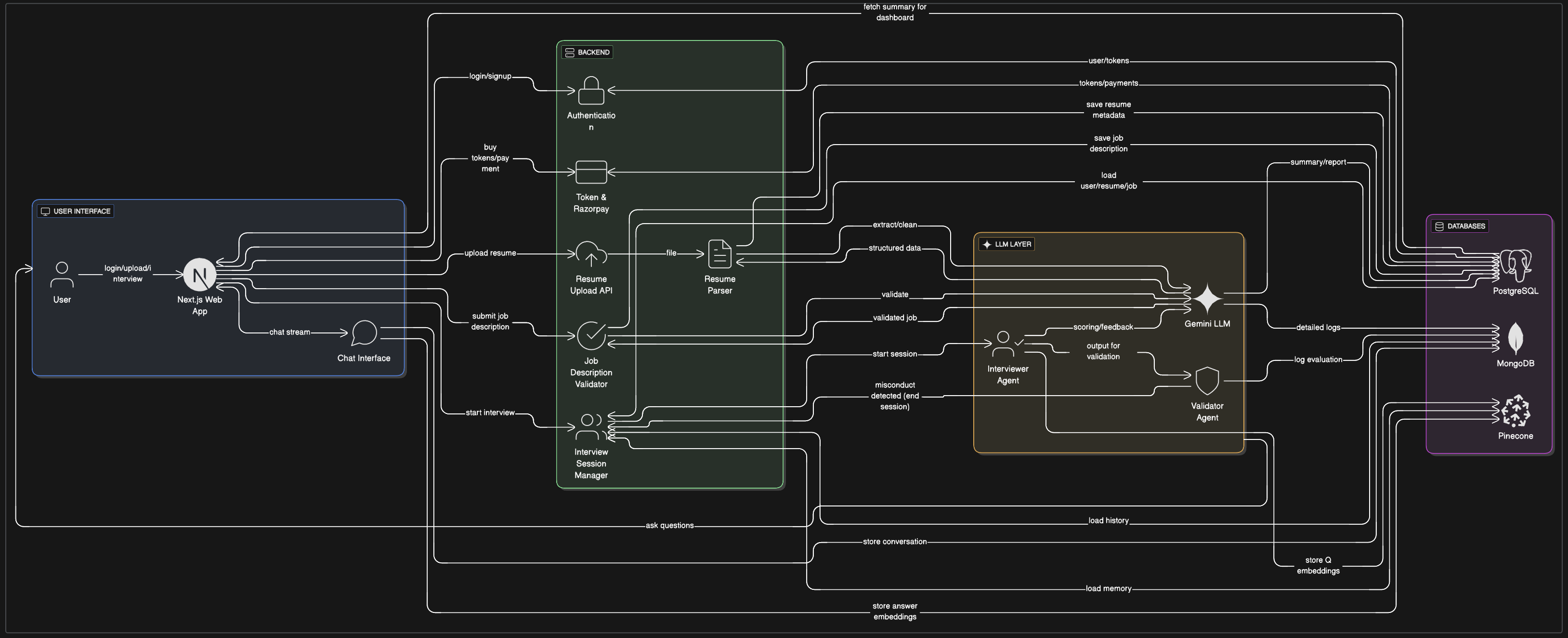

Turning that idea into a production-ready system required building a fully automated pipeline from login to final scoring, powered by LLMs, vector search, multiple databases, and a dual-agent monitoring system.

1. User Login and Dashboard

The platform starts with a standard authentication flow. After login, the user lands on their dashboard where they see remaining tokens, past interviews, and a resume upload section. The dashboard calls the backend to fetch user information from Postgres, which stores all user-related data including payments, tokens, and resume metadata.

2. Resume Upload and Parsing

The user uploads a resume (PDF, DOCX, etc.), triggering a multi-step pipeline:

- Step 1: The file is uploaded and stored securely.

- Step 2: An OCR-powered parser extracts raw text from the document.

- Step 3: The extracted text is passed to the LLM to identify work experience, skills, projects, and achievements.

- Step 4: Cleaned and structured resume data is stored in Postgres.

This allows the system to bring context into the interview without repeatedly parsing the file.
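As a rough illustration of Steps 3 and 4, the sketch below turns an LLM reply into a structured record that could then be persisted. It assumes the model is prompted to answer with a JSON object whose keys are `experience`, `skills`, `projects`, and `achievements`; those key names, `ParsedResume`, and `parse_llm_resume_output` are hypothetical and not PrepWAI's actual schema.

```python
import json
from dataclasses import dataclass, field
from typing import List


@dataclass
class ParsedResume:
    """Structured resume fields. The shape is an assumption, not PrepWAI's schema."""
    experience: List[str] = field(default_factory=list)
    skills: List[str] = field(default_factory=list)
    projects: List[str] = field(default_factory=list)
    achievements: List[str] = field(default_factory=list)


def parse_llm_resume_output(raw: str) -> ParsedResume:
    """Convert the LLM's JSON reply into a ParsedResume, tolerating missing keys."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object at the top level")

    def clean(key: str) -> List[str]:
        # Missing or null sections become empty lists; blank entries are dropped.
        values = data.get(key) or []
        if not isinstance(values, list):
            raise ValueError(f"{key} must be a list")
        return [str(v).strip() for v in values if str(v).strip()]

    return ParsedResume(
        experience=clean("experience"),
        skills=clean("skills"),
        projects=clean("projects"),
        achievements=clean("achievements"),
    )
```

Normalizing once at upload time is what lets later stages read the stored record instead of re-parsing the file.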

3. Interview Details and Validation

Before starting, the user fills in the target position and job description. The backend validates these inputs, checking that the job description lists required skills and responsibilities so that the interview questions map accurately to the role.
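One simple way to approximate this check is a keyword heuristic, sketched below. The hint lists, the `min_words` threshold, and the names `InterviewRequest` and `validate_request` are all assumptions made for illustration; the real backend may well validate differently (for example, by asking the LLM).

```python
from dataclasses import dataclass
from typing import List


@dataclass
class InterviewRequest:
    position: str
    job_description: str


# Hypothetical substrings that suggest each section is present.
SKILL_HINTS = ("skill", "experience with", "proficien", "requirement")
RESPONSIBILITY_HINTS = ("responsib", "you will", "duties", "role involves")


def validate_request(req: InterviewRequest, min_words: int = 30) -> List[str]:
    """Return a list of validation errors; an empty list means the input passes."""
    errors: List[str] = []
    if not req.position.strip():
        errors.append("position is required")

    text = req.job_description.lower()
    if len(text.split()) < min_words:
        errors.append(f"job description must have at least {min_words} words")
    if not any(hint in text for hint in SKILL_HINTS):
        errors.append("job description does not mention required skills")
    if not any(hint in text for hint in RESPONSIBILITY_HINTS):
        errors.append("job description does not mention responsibilities")
    return errors
```

Returning every error at once, rather than failing on the first, lets the form show the user everything that needs fixing in a single round trip.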

4. The Dual Agent Architecture

The core of PrepWAI is its Dual Agent System. To ensure the AI behaves like a professional interviewer, we split the responsibilities between two distinct agents working in parallel:

Agent 1: The Interviewer

The primary driver. It asks questions, analyzes responses, maintains tone, and uses context from the resume and job description to drive the session. It acts as the "creative" brain.

Agent 2: The Validator

The middleware safety net. It checks output structure and rule compliance, and ensures the interviewer remains polite and professional. It guards against hallucinated output and enforces the JSON schema.

The diagram below illustrates the complete lifecycle, from the initial user input to the final evaluation loop.

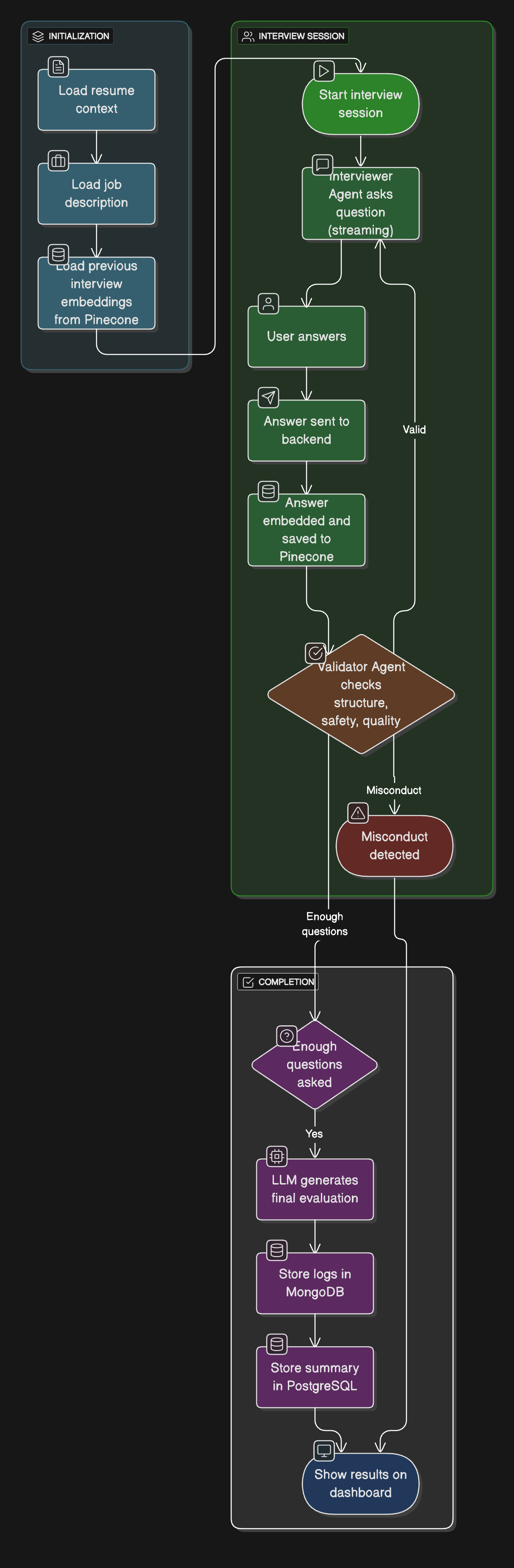
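To make the Validator's structural check concrete, here is a minimal sketch using only the standard library. It assumes each interviewer turn is a JSON object with `question`, `topic`, and `end_interview` fields; that schema, and the names `validate_turn` and `next_turn`, are hypothetical. The `interviewer` callable stands in for the real LLM call and receives the previous attempt's problems so it can correct itself.

```python
import json
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical schema for one interviewer turn: field name -> expected type.
REQUIRED_FIELDS = {"question": str, "topic": str, "end_interview": bool}


def validate_turn(raw: str) -> Tuple[Optional[Dict], List[str]]:
    """Return (turn, problems); turn is None whenever problems is non-empty."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return None, [f"invalid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return None, ["top-level value must be an object"]

    problems: List[str] = []
    for name, expected in REQUIRED_FIELDS.items():
        if name not in data:
            problems.append(f"missing field: {name}")
        elif not isinstance(data[name], expected):
            problems.append(f"wrong type for {name}")
    return (data if not problems else None), problems


def next_turn(interviewer: Callable[[List[str]], str], max_attempts: int = 3) -> Dict:
    """Ask the interviewer for a turn, retrying with feedback until it validates."""
    feedback: List[str] = []
    for _ in range(max_attempts):
        turn, feedback = validate_turn(interviewer(feedback))
        if turn is not None:
            return turn
    raise RuntimeError(f"interviewer output rejected: {feedback}")
```

Tone and rule-compliance checks would sit alongside the structural check in the same loop; they are omitted here because the post does not say how they are implemented.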

5. Context Storage in Pinecone (Vector DB)

Every question and answer is embedded and stored in Pinecone. This allows the interviewer to query past data during the session. For example, if the candidate is asked a system design question, the LLM can look up how they answered a similar question in a previous session and adapt its evaluation.
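The retrieval idea can be shown without a hosted vector database. The sketch below is an in-memory stand-in that ranks stored question/answer pairs by cosine similarity; it is not Pinecone's API, and `MemoryItem`, `InterviewMemory`, and the tiny hand-written vectors are purely illustrative. In the real system the vectors would come from an embedding model.

```python
import math
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class MemoryItem:
    session_id: str
    question: str
    answer: str
    vector: List[float]  # embedding of the question/answer text


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class InterviewMemory:
    """In-memory stand-in for a vector index (illustration only)."""

    def __init__(self) -> None:
        self._items: List[MemoryItem] = []

    def add(self, item: MemoryItem) -> None:
        self._items.append(item)

    def query(self, vector: List[float], top_k: int = 3,
              exclude_session: Optional[str] = None) -> List[MemoryItem]:
        # Optionally skip the current session so only past interviews match.
        candidates = [i for i in self._items if i.session_id != exclude_session]
        ranked = sorted(candidates, key=lambda i: cosine(vector, i.vector), reverse=True)
        return ranked[:top_k]
```

Before evaluating a system design answer, the interviewer could embed the current question, call `query(...)` with the current session excluded, and include the top matches in the prompt.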

6. Monitoring User Behavior

Safety is critical. If the candidate uses abusive language, makes threats, or otherwise misbehaves, the system immediately detects the violation, ends the interview, and logs the event. This keeps interactions professional and controlled.

7. Ending the Interview

The session ends when the interviewer decides enough questions have been asked, the evaluation agent determines the candidate has reached the limit, or a violation occurs. Ending the session triggers the final evaluation.
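The three end conditions can be expressed as a single decision function, sketched below. Treating "the limit" as a maximum question count is my assumption for the example, as are the default of 10 and the names `SessionState`, `EndReason`, and `end_reason`.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class EndReason(Enum):
    INTERVIEWER_DONE = "interviewer_done"
    LIMIT_REACHED = "limit_reached"
    VIOLATION = "violation"


@dataclass
class SessionState:
    questions_asked: int
    interviewer_wants_to_end: bool = False
    violation_detected: bool = False


def end_reason(state: SessionState, max_questions: int = 10) -> Optional[EndReason]:
    """Return why the session should end, or None if it should continue."""
    # A violation takes priority so it is always the recorded reason.
    if state.violation_detected:
        return EndReason.VIOLATION
    if state.questions_asked >= max_questions:
        return EndReason.LIMIT_REACHED
    if state.interviewer_wants_to_end:
        return EndReason.INTERVIEWER_DONE
    return None
```

Checking the violation first means a session that hits several conditions at once is still logged as a violation.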

8. Final Evaluation and Scoring

The system generates an overall score, strengths, weaknesses, and topic-wise performance. This detailed result is stored in MongoDB, which handles the heavy lifting of interview logs and detailed context, while Postgres keeps the high-level summary for the dashboard.
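The split between the two stores might look like the sketch below: one full document for MongoDB and one small row for Postgres. The field names and `split_evaluation` are hypothetical, and the actual database writes are left out.

```python
from typing import Any, Dict, Tuple

# Hypothetical fields the dashboard needs; everything else stays in the detail document.
SUMMARY_FIELDS = ("interview_id", "user_id", "overall_score")


def split_evaluation(evaluation: Dict[str, Any]) -> Tuple[Dict[str, Any], Dict[str, Any]]:
    """Return (summary_row, detail_document) for the relational and document stores."""
    missing = [name for name in SUMMARY_FIELDS if name not in evaluation]
    if missing:
        raise KeyError(f"evaluation is missing: {missing}")
    summary = {name: evaluation[name] for name in SUMMARY_FIELDS}
    detail = dict(evaluation)  # shallow copy of the full record
    return summary, detail
```

Sharing `interview_id` across both records lets the dashboard list interviews from the summary rows and fetch the full breakdown only when the user opens one.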

9. Database Strategy

PrepWAI uses a polyglot persistence strategy to stay efficient:

- Postgres: Structured data (User accounts, payments, resume metadata).

- MongoDB: Unstructured/Semi-structured data (Interview logs, conversation history, evaluations).

- Pinecone: Vector data (Embeddings for long-term memory and context retrieval).

10. Token System and Payments

Users purchase tokens via Razorpay to fund their sessions. Each interview deducts a fixed number of tokens. We use Postgres to track payment status, transaction IDs, and granular token usage history, providing complete transparency to the user.
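The token bookkeeping reduces to a small ledger, sketched here in memory. The cost of 5 tokens per interview is a made-up number, `TokenAccount` and `InsufficientTokens` are hypothetical names, and `payment_id` is just an opaque string standing in for whatever transaction ID the payment provider returns; no Razorpay calls are shown.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

INTERVIEW_COST = 5  # hypothetical fixed cost per interview


class InsufficientTokens(Exception):
    """Raised when an account cannot cover the cost of an interview."""


@dataclass
class TokenAccount:
    balance: int = 0
    # Each entry is (kind, amount, reference), e.g. ("credit", 10, "pay_1").
    history: List[Tuple[str, int, str]] = field(default_factory=list)

    def credit(self, amount: int, payment_id: str) -> None:
        """Add purchased tokens once a payment is confirmed."""
        if amount <= 0:
            raise ValueError("amount must be positive")
        self.balance += amount
        self.history.append(("credit", amount, payment_id))

    def charge_interview(self, interview_id: str, cost: int = INTERVIEW_COST) -> None:
        """Deduct the fixed cost, refusing if the balance is too low."""
        if self.balance < cost:
            raise InsufficientTokens(f"need {cost}, have {self.balance}")
        self.balance -= cost
        self.history.append(("debit", cost, interview_id))
```

In a real deployment the balance check and the deduction would need to run inside one database transaction so that two concurrent interviews cannot both spend the same tokens.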

Conclusion

Building PrepWAI pushed me into designing a real end-to-end AI system, not just a chat interface. By combining LLM-powered reasoning, multi-agent validation, and a specialized database strategy, the result is an AI interviewer that behaves consistently, adapts to the user, and guides them to improve with every session.